As AI becomes more embedded in our daily tools and decisions, the ethics of AI are no longer a theoretical concern. They’re a practical one that every business owner must understand.

Artificial Intelligence is no longer science fiction. It’s writing emails, answering support tickets, driving marketing campaigns, and even recommending who gets hired or approved for a loan.

But with that power comes a serious responsibility: Are we building something helpful, or something harmful?

We believe technology should serve people, not replace or manipulate them. Below, we break down the ethics of AI in a clear, actionable way for small business owners, professionals, and anyone curious about using AI responsibly.

What Is AI, Really?

AI is a set of algorithms trained on massive amounts of data. It detects patterns, makes predictions, and generates content or decisions. But AI doesn’t think, feel, or have values. It calculates.

That distinction matters when discussing ethics.

5 Ethical Questions Around AI That You Should Understand

1. Can AI Be Biased?

Yes, and it already is.

AI learns from data, and if that data reflects social bias, the AI will reflect it too. For example:

- A resume filter might favor male candidates if past hires were mostly male.

- A loan tool might reject applicants from marginalized communities at higher rates if it learned from historically skewed lending decisions.

- A facial recognition system might misidentify people of color at higher rates.

What to do: Use AI tools that are transparent about their training data and reviewed for fairness. Bias in, bias out.
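If you can export a tool's decisions, one simple way to start checking for bias is to compare how often each group is selected. The sketch below is a minimal illustration, not a full fairness audit. The group labels, the sample data, and the function names are all made up for this example, and the 0.8 threshold is an assumption borrowed from the "four-fifths" rule of thumb used in US hiring guidance.

```python
from collections import defaultdict

def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs; returns {group: rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}

def impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap to measure
    return min(rates.values()) / highest

# Hypothetical screening results, invented for illustration only.
sample = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(sample)
ratio = impact_ratio(rates)
needs_review = ratio < 0.8  # assumed threshold (four-fifths rule of thumb)

print(rates)
print(round(ratio, 2), needs_review)
```

A low ratio does not prove a tool is unfair, and a high one does not prove it is fair. It is a signal that a person should look more closely at how the tool makes its decisions.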

2. Should AI Replace Humans?

Automation is helpful, but replacing people raises ethical questions.

- A chatbot can answer FAQs, but should it deliver bad news to a customer?

- AI can write reports, but should it make hiring or firing decisions?

Use AI to assist, not erase. The best uses of AI amplify human skills rather than eliminating them. Before integrating AI into your operations, it’s essential to assess your business’s readiness. Our guide, How to Know If Your Business Is Ready for AI, offers a comprehensive checklist to help you evaluate your preparedness.
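The "assist, not erase" idea can be sketched as a simple review gate: the AI may act on its own for routine, low-stakes tasks, while anything that affects someone's job or finances goes to a person. Everything here is hypothetical, including the `Suggestion` fields, the list of high-stakes decision types, and the 0.9 confidence cutoff. Treat it as a starting point for your own rules rather than a finished policy.

```python
from dataclasses import dataclass

# Assumed list of decision types that should never be fully automated.
HIGH_STAKES = {"hiring", "firing", "loan_approval"}

@dataclass
class Suggestion:
    decision_type: str   # e.g. "faq_reply" or "hiring"
    recommendation: str  # what the AI tool proposes
    confidence: float    # tool-reported score between 0 and 1

def route(suggestion, min_confidence=0.9):
    """Return "auto" only for low-stakes, high-confidence suggestions."""
    if suggestion.decision_type in HIGH_STAKES:
        return "human_review"
    if suggestion.confidence < min_confidence:
        return "human_review"
    return "auto"

print(route(Suggestion("faq_reply", "Send store hours", 0.97)))  # auto
print(route(Suggestion("hiring", "Reject candidate", 0.99)))     # human_review
```

Note that the hiring suggestion goes to a person even though the tool is highly confident. Confidence measures how sure the model is, not whether the decision is one a machine should be making.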

3. Is AI Manipulating Us?

Some AI systems are optimized for engagement, not truth or well-being. That leads to:

- Sensational news feeds

- Clickbait headlines

- Addictive app designs

AI can manipulate behavior by reinforcing what you already believe or nudging decisions without your full awareness.

Ethical AI design prioritizes transparency and user control over manipulation.

4. Can AI Fake Emotions?

Yes. AI can mimic empathy, friendliness, and even sadness. But it doesn’t feel any of those things.

- Chatbots can sound supportive

- AI avatars can simulate compassion

- Virtual assistants can “care”

This emotional mimicry can build trust, but it can also mislead. Make it clear to users when they are talking to a machine rather than a human.

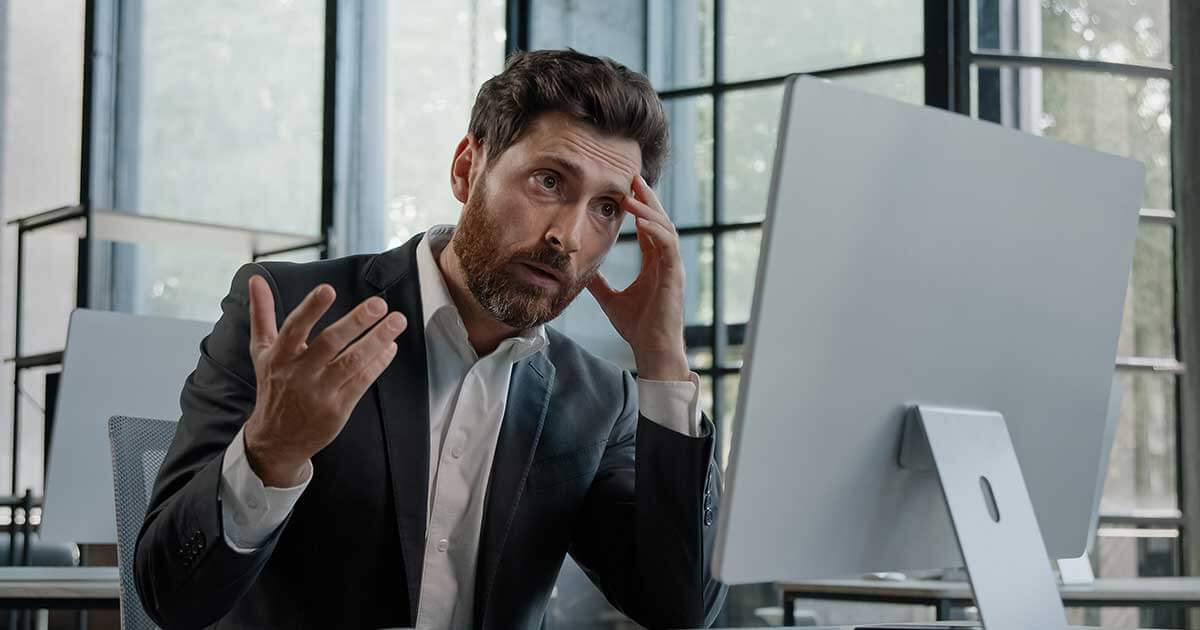

5. Who’s Responsible When AI Fails?

AI doesn’t take responsibility. So when something goes wrong:

- Who’s accountable? The developer? The user? The company?

- Who gets hurt? Customers? Employees? Entire communities?

Law and policy are still catching up, and in many cases they offer no clear answers yet. Until they do, businesses must set their own standards of responsibility.

How to Use AI Ethically in Your Business

- Be transparent: Let customers know when they’re interacting with AI.

- Avoid deception: Don’t design AI to trick people into thinking it’s human.

- Check for bias: Choose tools with fair and well-audited data practices.

- Respect privacy: Don’t share sensitive info with generative tools unless you’re sure the tool will handle it securely.

- Keep a human in the loop: Use AI to support decisions, not make them unilaterally.
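As one small, practical example of the privacy point above, you can strip obvious personal details from text before pasting or sending it to a generative tool. The sketch below uses two simple patterns for email addresses and phone numbers. The patterns and the `redact` function are assumptions made for illustration. They will miss names, addresses, account numbers, and many other kinds of sensitive data, so they are a first filter and not a guarantee.

```python
import re

# Simple illustrative patterns; real-world data needs broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def redact(text):
    """Replace email addresses and phone numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text

prompt = "Draft a reply to jane.doe@example.com, who called from 555-123-4567."
print(redact(prompt))
# Draft a reply to [EMAIL], who called from [PHONE].
```

Placeholders like `[EMAIL]` keep the request readable for the AI tool, and you can fill the real details back in yourself before anything goes to the customer.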

Final Thought: AI Needs Human Ethics

If we want a future where AI helps rather than harms, we need to design, deploy, and supervise it with care. That starts with asking questions, staying informed, and never handing off responsibility to a machine.

Want help integrating AI into your business the right way?

We help small businesses use technology to grow ethically and effectively.