If you have been watching AI headlines lately, you have probably noticed a pattern: almost every country wants the benefits of AI, and almost every country is

That tension is exactly why the India AI Impact Summit in New Delhi matters.

The summit runs February 16–20, 2026, and it is drawing a rare mix of heads of state and top AI company leaders. It is also being framed as the first major global AI summit hosted in the Global South, which changes the politics of who gets to shape the rules.

I am going to walk you through the practical ways this summit could influence global AI policy, even if it does not produce a binding treaty.

Why this summit is different

Most global AI gatherings have been hosted by wealthy countries and have naturally reflected their priorities: frontier model safety, national security, and regulating powerful tech firms.

India is coming in with a different framing. Official Indian government materials say the summit is anchored in three “Sutras,” or guiding themes: People, Planet, Progress. In plain language: make AI useful for people, sustainable, and tied to real economic outcomes.

That matters because global AI policy is often a tug-of-war between:

- Risk control (testing, transparency, liability, security)

- Economic competition (who builds, who deploys, who benefits)

- Inclusion (languages, affordability, access to computing, workforce impact)

India is trying to make inclusion and development central, not a side note.

The summit is likely to set “soft law” that hardens later

According to the Associated Press, the summit is not expected to produce a binding political agreement, but it may conclude with a non-binding pledge or “New Delhi Declaration.”

A non-binding declaration can still shape policy in real ways because it often becomes “soft law”:

- Governments reuse the language in their own national AI laws.

- Regulators cite it when defining what “reasonable safety” looks like.

- Companies adopt it as a baseline to avoid being shut out of markets.

So when the New Delhi Declaration lands, the details will matter more than the headlines. Vague principles are easy to sign. Specific commitments are where policy starts to move.

The “working groups” approach can produce templates other countries copy

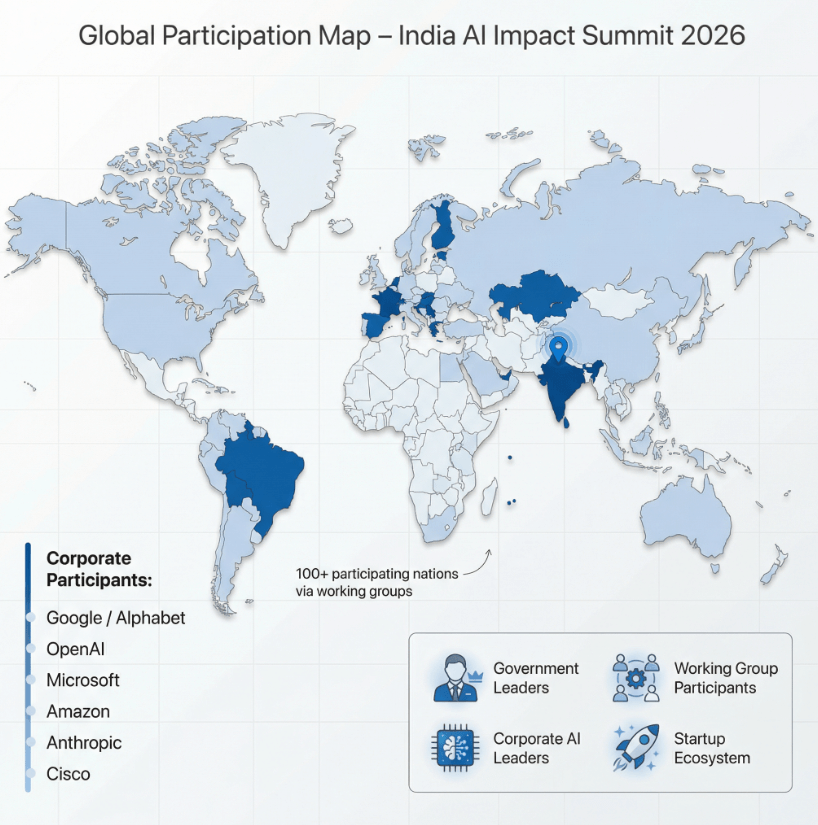

India’s government describes Seven Chakras, basically working groups, with over 100 countries engaging through them.

This is one of the most important and least glamorous parts of global governance. Working groups are where people create:

- model evaluation checklists

- incident reporting expectations

- procurement guidelines for government use of AI

- frameworks for responsible deployment in sensitive areas

If these outputs are practical, other governments copy them because it saves time. Copying turns into standardization, and standardization turns into global policy pressure.

India’s market scale gives it leverage in global rulemaking

Reuters reports that India is becoming a major center of AI adoption and infrastructure investment, with big commitments in cloud and AI infrastructure from major firms.

Why does that matter for governance? Because large markets shape product behavior. If India pushes for specific guardrails, transparency requirements, or language coverage, companies pay attention. They do not want to be locked out of a fast-growing user base.

This is one of the quiet drivers of global policy: market access is often more persuasive than speeches.

The attendee list signals where power is consolidating

On the government side, AP reports that about 20 leaders are participating, including France’s Emmanuel Macron and Brazil’s Luiz Inácio Lula da Silva, alongside Prime Minister Narendra Modi.

On the company side, Reuters reports attendance by leaders from major AI players, including Google (Alphabet), OpenAI, and Anthropic, among others.

This matters because global AI policy is not just written by governments. In practice, it is negotiated between governments and the handful of companies that control frontier models, compute access, and deployment channels. A summit that brings them together can accelerate alignment on baseline expectations.

It could shift the center of gravity toward “AI that gets deployed,” not just AI that gets built

One of the most interesting signals from Reuters is that India is leaning into large-scale deployment and applications, even as it acknowledges it does not lead the world in training the largest frontier models.

That can shape global policy priorities. Here is what changes when deployment becomes the center:

- more focus on AI in public services, education, health, and small business

- more focus on multilingual access and local context

- more focus on real-world harms like scams, misinformation, and unsafe advice

- more focus on procurement standards and audits, not just research lab safety

In other words, policy moves closer to where everyday people actually encounter AI.

One caution: credibility and execution matter

Reuters also reported that the summit’s opening day faced serious logistical problems like long queues and confusion.

That might sound unrelated to governance, but it is not. Global policy is built on trust and competence. When a country wants to lead a global governance conversation, smooth execution helps reinforce the message.

What I am watching for in the final outcomes

If you want to understand whether this summit truly shaped global AI policy, here are the signals that matter:

- Specific language in the New Delhi Declaration

Look for concrete items like evaluation expectations, incident reporting norms, or shared principles on accountability.

- Exportable working-group deliverables

If the Seven Chakras publish practical templates, other governments will reuse them.

- Commitments that reduce the AI divide

Watch for real commitments around access to compute, multilingual models, and skills training, especially for countries that are usually policy takers rather than policy makers.

- Company commitments that can be tracked

Voluntary promises matter more when they are measurable, such as publishing safety reports, participating in audits, or deploying safeguards tied to elections or child safety.